Create a split test

Test your campaign content with several variants

Split testing involves creating variants of your message that will be sent to a percentage of the campaign audience. The results can then be analysed to determine which variant was most successful in accomplishing your goals. This winning variant can then be sent to the remaining percentage of the campaign audience or used to inform future campaigns.

How does it work?

A variant or control group of 30% does not mean that exactly 30% of the users will be in the variant or control group. Instead, each individual user has a 30% chance of being added to that variant or control group.

For small sends, this results in high variance. For example, a send to 3 users with a 30% control group could result in no users entering the control group, or in all of them entering it. However, for sends targeting 1,000+ users, the variance should be small.
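To make the variance point concrete, here is a minimal simulation sketch. It assumes, hypothetically, that each user is independently placed in the control group with probability `control_pct` and otherwise given a uniformly random variant; `allocate` and `control_share` are illustrative names, not part of the Xtremepush product.

```python
import random


def allocate(user_ids, control_pct, variants, rng):
    """Hypothetical per-user allocation: a 30% control group means each user
    has a 30% probability of landing in control, not an exact 30% slice."""
    assignments = {}
    for uid in user_ids:
        if rng.random() < control_pct:
            assignments[uid] = "control"
        else:
            # Users outside the control group get one of the variants at random.
            assignments[uid] = rng.choice(variants)
    return assignments


def control_share(n_users, control_pct, seed):
    """Fraction of n_users that landed in control for one simulated send."""
    rng = random.Random(seed)
    result = allocate(range(n_users), control_pct, ["A", "B"], rng)
    return sum(1 for group in result.values() if group == "control") / n_users


# Five simulated sends to 3 users versus five simulated sends to 10,000 users.
for n in (3, 10_000):
    shares = [round(control_share(n, 0.30, seed), 3) for seed in range(5)]
    print(n, shares)
```

With 3 users, the control share can only be 0%, 33%, 67% or 100%, so it swings widely between sends; with 10,000 users it stays close to 30%.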

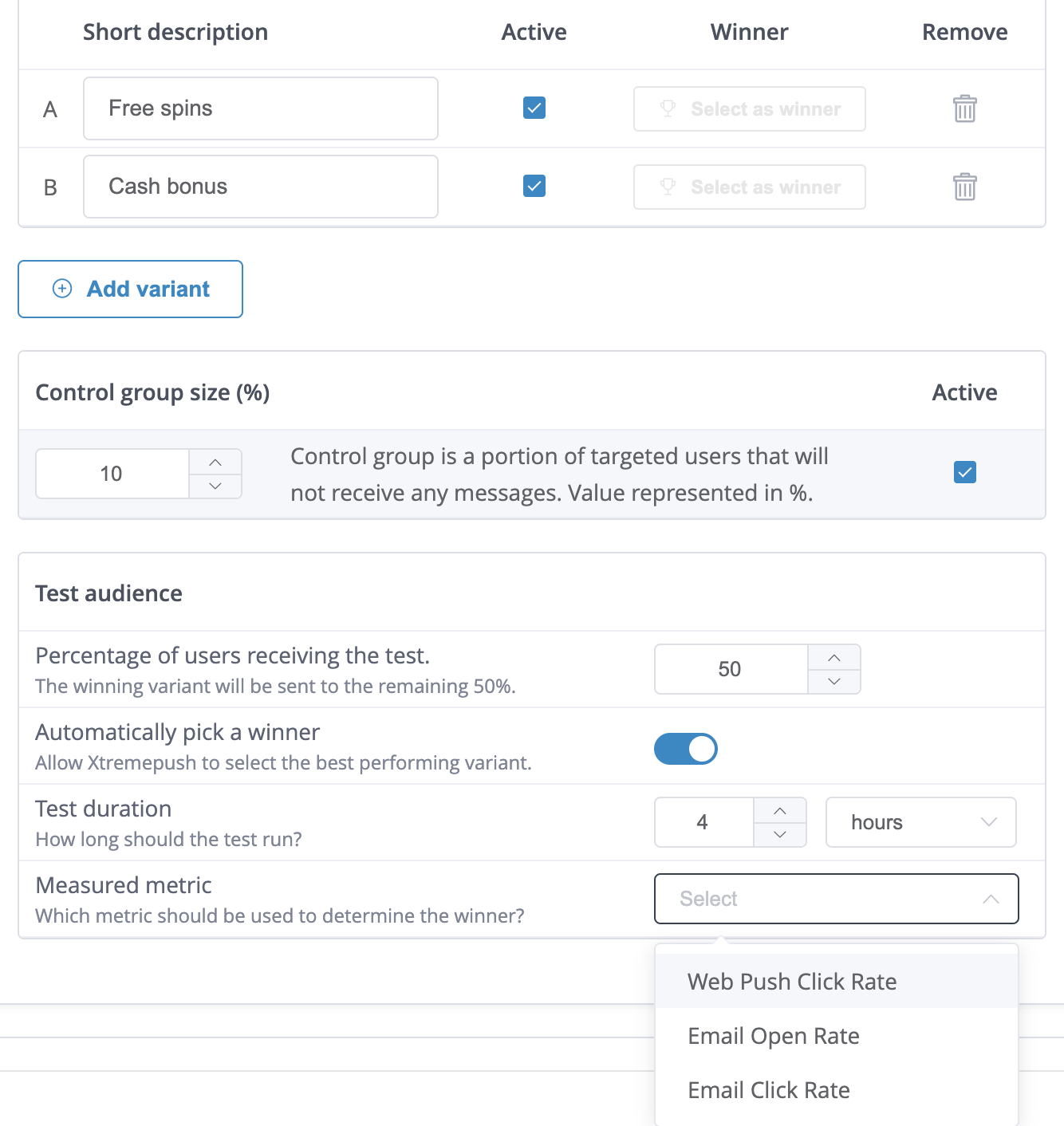

Add a new message variant by clicking the Add variant button. Enter a description for each message variant in the Short description input, and toggle each variant active or inactive depending on which ones you want to run at any given time.

Variant limit

It's not recommended to set up more than 7 variants.

Control Group

If required, you can define the size of a control group by entering a percentage figure in the Control group size input field. This portion of the campaign's targeted users will not receive any messages.

Using a control group allows you to compare user activity between the audience that received a message and the audience that didn't, giving you a direct measure of the influence of sending a specific message to your users.

Random allocation

By default, each user profile keeps the variant it was allocated in the initial send, and that allocation stays the same in subsequent sends. This also applies to the control group. Enabling Random Allocation instead lets users receive a random variant (including the control group) in each send.
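The difference between the default (sticky) allocation and Random Allocation can be sketched as follows. This is a hypothetical model, not Xtremepush's implementation: the `Allocator` class is illustrative, and for simplicity it picks uniformly among the control group and variants rather than honouring configured percentages.

```python
import random


class Allocator:
    """Hypothetical model of allocation across repeated sends.

    random_allocation=False mirrors the default: a user keeps the group
    (variant or control) from their first send.
    random_allocation=True draws a fresh group on every send.
    """

    def __init__(self, groups, random_allocation, seed=None):
        self.groups = list(groups)  # e.g. ["control", "A", "B"]
        self.random_allocation = random_allocation
        self.rng = random.Random(seed)
        self.first_assignment = {}  # user_id -> group from the initial send

    def assign(self, user_id):
        # Default behaviour: reuse the group from the initial send if there is one.
        if not self.random_allocation and user_id in self.first_assignment:
            return self.first_assignment[user_id]
        group = self.rng.choice(self.groups)
        # Remember only the first assignment for each user.
        self.first_assignment.setdefault(user_id, group)
        return group


sticky = Allocator(["control", "A", "B"], random_allocation=False, seed=1)
print([sticky.assign("user-42") for _ in range(5)])  # same group on every send

shuffled = Allocator(["control", "A", "B"], random_allocation=True, seed=1)
print([shuffled.assign("user-42") for _ in range(5)])  # can change per send
```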

Variant percentage split

By default, the audience is initially split equally between variants. To set different percentages for each variant, enable the Variant Percentage Split toggle.

Do the math

The sum of percentages set up on variants when using the Variant Percentage Split option must equal 100%.
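A minimal sketch of the 100% rule and of a weighted per-user pick, assuming (hypothetically) that a custom split behaves like a set of weights. The function names are illustrative; they show the arithmetic, not how Xtremepush validates the form.

```python
import math
import random


def validate_split(split):
    """Raise if the variant percentages don't add up to 100%."""
    total = sum(split.values())
    # isclose tolerates float rounding, e.g. three shares of 100/3.
    if not math.isclose(total, 100):
        raise ValueError(f"Variant percentages must sum to 100%, got {total}%")


def equal_split(variant_names):
    """Default behaviour: share the audience equally between variants."""
    share = 100 / len(variant_names)
    return {name: share for name in variant_names}


def pick_variant(split, rng):
    """Choose a variant for one user, weighted by its percentage."""
    validate_split(split)
    names = list(split)
    return rng.choices(names, weights=[split[n] for n in names], k=1)[0]


rng = random.Random(0)
custom = {"A": 60, "B": 30, "C": 10}
print(pick_variant(custom, rng))
print(equal_split(["A", "B", "C"]))
```

A split such as `{"A": 60, "B": 30}` adds up to only 90%, so `validate_split` rejects it.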

Test Audience

Percentage of users receiving the test

Set the percentage of your campaign audience that will receive the initial test. This can be anything from 1% to 100%. Make sure the test audience is large enough to produce meaningful results: for example, a 10% test audience on a campaign that already has a small audience is unlikely to give trustworthy results. If you want the whole campaign to be a test that informs future campaign segmentation decisions, rather than sending the winner in the current campaign, consider setting a 100% test audience so that everyone is included in the test.

Triggered campaigns

Triggered campaigns (such as API or event-triggered campaigns) do not allow setting a percentage.

Every recipient for whom the campaign is triggered will be sent a random variant. Once the test duration elapses, a winner is selected. From that point on, only the winning variant is sent to users triggering the campaign (including those who triggered it earlier and were sent a different variant).
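The triggered-campaign behaviour can be sketched as a single routing decision per trigger. This is a hypothetical illustration: `variant_for_trigger` and its parameters are not part of any Xtremepush API, and the dates are made up.

```python
import random
from datetime import datetime, timedelta


def variant_for_trigger(triggered_at, test_start, test_duration, variants, winner, rng):
    """Hypothetical routing for one trigger of a triggered campaign.

    During the test window every trigger gets a random variant. Once the test
    duration has elapsed and a winner exists, every trigger (including users
    who triggered earlier) gets the winning variant.
    """
    test_over = triggered_at >= test_start + test_duration
    if test_over and winner is not None:
        return winner
    return rng.choice(variants)


rng = random.Random(3)
start = datetime(2024, 1, 1, 9, 0)
duration = timedelta(hours=24)
# Two hours in: still inside the test window, so a random variant is chosen.
print(variant_for_trigger(start + timedelta(hours=2), start, duration, ["A", "B"], None, rng))
# Thirty hours in, with B selected as the winner.
print(variant_for_trigger(start + timedelta(hours=30), start, duration, ["A", "B"], "B", rng))  # B
```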

Control groups and test audiences

Any control group set will be taken into consideration for the test audience size. If a test audience of 10% is set on a campaign with a starting audience of 1000 users, then the size of the test would be 100 users.

However, if there is also a 20% control group set up on this campaign, then around 20 of those users will be included in the Control Group (and won't receive any messages), and the remaining users (around 80) will be the test audience.

Automatically pick a winner

You can leave this option off and run the test manually. In that case, you need to return to the campaign, review the engagement so far, and select a winner by clicking the select as winner button next to one of the variants. If, however, you enable the option for Xtremepush to select the winner automatically, two more options become available, which let you set the rules for when and how a winner is selected.

Test Duration

Set how long the test runs. Once this period elapses, a winning variant is selected. For example, with a test duration of 24 hours, a campaign due to start at 9 am on a Monday will select and send the winner at 9 am the next day, Tuesday.

Set a test length appropriate for the campaign: long enough for recipients to receive and engage with the messages, but not so long that the message is out of date by the time it is sent to the remaining audience.
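The winner send time is simply the campaign start plus the test duration. A quick check of the Monday example, using a hypothetical date (1 January 2024, which was a Monday):

```python
from datetime import datetime, timedelta

# Hypothetical figures: a campaign starting at 9 am on Monday 1 January 2024
# with a 24-hour test duration.
campaign_start = datetime(2024, 1, 1, 9, 0)
test_duration = timedelta(hours=24)

winner_send_time = campaign_start + test_duration
print(winner_send_time.strftime("%A %H:%M"))  # Tuesday 09:00
```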

Measured Metric

For Xtremepush to determine the winner, you need to set the metric used to make the decision. Every channel enabled for the campaign that has a trackable metric is listed in this selection. For example, adding email to the campaign lets you select email opens or email clicks as the metric used to determine the winner. Only one metric can be selected.

Setting the Winner

Xtremepush variants are connected across all channels. Therefore, once the winner is determined, the winning variant will be set across all channels, regardless of the specific channel metric used to determine the winner. For this reason, we advise that variants have a common theme/offer across all channels. For example:

Variant A (across all channels): Free item offer

Variant B (across all channels): Money back offer
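A minimal sketch of winner selection on a single metric. The engagement counts, metric names and the "highest rate wins" rule are all hypothetical assumptions for illustration; the article does not state whether Xtremepush compares raw counts or rates.

```python
# Hypothetical engagement counts per variant, for illustration only.
results = {
    "A": {"email_sent": 200, "email_opens": 46, "webpush_clicks": 12},
    "B": {"email_sent": 210, "email_opens": 63, "webpush_clicks": 9},
}


def pick_winner(results, metric, denominator):
    """Return the variant with the highest metric / denominator ratio."""
    return max(results, key=lambda v: results[v][metric] / results[v][denominator])


winner = pick_winner(results, metric="email_opens", denominator="email_sent")
# The winner is a whole variant, so its content is used on every channel,
# including web push, even though only email opens decided it.
print(winner)  # B
```

Here variant B wins on email open rate (63/210 = 30% versus 46/200 = 23%), so B's web push content is also sent going forward, even though variant A had more web push clicks.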

Test compatibility by campaign type

Automatic winner selection is not available for all campaign types. The table below shows which options are available for each campaign type.

| Campaign Type | Manual Winner | Automatic Winner | Percentage Test |
|---|---|---|---|
| Single Stage Sends (Scheduled) | Yes | Yes | Yes |
| Single Stage Sends (Triggered) | Yes | Yes | No |
| In App / On Site | Yes | No | No |
| Multi-stage journeys (Scheduled) | No | No | No |
| Multi-stage journeys (Triggered) | Yes | No | No |
| Optimove triggered campaigns | Yes | No | No |
| Salesforce MC | Yes | No | No |

Analyse test and winning variant engagement

Winning variants are indicated with a trophy icon next to them. This can be seen both in the Setup tab for the campaign itself and on the analytics page for the campaign.

When a winner is selected, it is copied to a new variant, which is then used as the sent variant from that point on. This makes it easy to see the engagement for the test variants up to the point the winner was selected, and for the winning variant from then onwards.

Example

Below is an example of how this could look:

In this example, two variants are configured for a scheduled web push and email campaign.

- A control group of 10% has been set.

- A test audience of 50% has been set.

- The campaign will automatically pick a winner.

- The test will last for 4 hours, after which the system will pick a winner based on the metric selected.

- The campaign audience is 1,000 users.

Around 100 users will be in the control group, and they won't be sent anything. Of the remaining 900 or so users, 50% are assigned to the variant test (around 450), so roughly 225 will receive variant A and 225 will receive variant B, allocated at random.

After 4 hours, the system determines the winning variant. That variant is copied to a new variant and sent to the remaining 450 users.
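The arithmetic in this example can be reproduced with a small helper. This is a hypothetical sketch that follows this example's ordering (control taken from the whole audience first, then the test percentage applied to the users left over); the results are expected sizes, since actual allocation is random per user.

```python
def expected_group_sizes(audience, control_pct, test_pct, n_variants):
    """Expected (not exact) group sizes, following the worked example.

    Percentages are given as whole numbers, e.g. 10 for 10%.
    """
    control = audience * control_pct / 100
    eligible = audience - control             # users who can receive a message
    test = eligible * test_pct / 100          # users in the variant test
    per_variant = test / n_variants           # equal split between variants
    winner_audience = eligible - test         # users who get the winner later
    return {
        "control": control,
        "test": test,
        "per_variant": per_variant,
        "winner": winner_audience,
    }


print(expected_group_sizes(1000, 10, 50, 2))
# {'control': 100.0, 'test': 450.0, 'per_variant': 225.0, 'winner': 450.0}
```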
